I’m excited about Small Language Models (SLMs), which essentially means AI on your own computer or within your infrastructure. The promise is tempting: full data privacy, zero API costs, and operation on your own hardware. But how does this promise hold up when we move to building chatbots with basic RAG capabilities?

The goal of this experiment was to create an AI assistant for the internal use of a hypothetical company, capable of autonomously utilizing tools – in this case, searching a database of company documents (RAG).

During testing, I hit a wall: in my stack of Pydantic AI + Ollama, small models (below 10-12 billion parameters) either failed to handle the standard OpenAI Function Calling API or handled it far too slowly for a chat application. This case study documents my journey through a series of failures until I found a solution, though only a partial one.

Objective and Technology Stack

Before we dive into the tests, let’s define the problem. We wanted an AI agent, running on a local machine (via Ollama) and managed by the Pydantic AI framework, to autonomously decide when it needed information from our ChromaDB vector database.

Project Stack:

- Backend: Django 5

- Agent Framework: Pydantic AI 1.9.1

- LLM Hosting: Ollama

- Vector Database: ChromaDB 1.3.0

- Hardware: Intel i7-13700F (16 cores), 64GB RAM, RTX 4060 Ti 16GB VRAM

The key element was the search_documents_tool that the agent was supposed to call independently. For this to work, the model not only had to understand Polish (which was my initial assumption) but also the JSON format in which Pydantic AI (using the OpenAI schema) passes tool definitions and expects responses.
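To make that format concrete, here is a minimal sketch of an OpenAI-style tool definition and a well-formed tool call, written as plain Python data. Only the tool name search_documents_tool comes from this project; the description text, the `query` parameter and the `validate_tool_call` helper are assumptions made for illustration.

```python
import json
from typing import Optional

# OpenAI-style tool definition; "parameters" is a JSON Schema object.
# The description and the `query` parameter are illustrative assumptions.
SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "search_documents_tool",
        "description": "Search the company document database.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
}

# A correct assistant reply: no free text, just a structured call.
# In the OpenAI format, "arguments" is a JSON-encoded string.
GOOD_REPLY = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_1",
            "type": "function",
            "function": {
                "name": "search_documents_tool",
                "arguments": json.dumps({"query": "adres firmy"}),
            },
        }
    ],
}


def validate_tool_call(reply: dict, tool: dict) -> Optional[dict]:
    """Return the parsed arguments if `reply` correctly calls `tool`, else None."""
    spec = tool["function"]
    for call in reply.get("tool_calls") or []:
        fn = call.get("function", {})
        if fn.get("name") != spec["name"]:
            continue
        raw = fn.get("arguments")
        try:
            # Accept an already-parsed object as well as a JSON string.
            args = raw if isinstance(raw, dict) else json.loads(raw)
        except (TypeError, json.JSONDecodeError):
            return None
        if not isinstance(args, dict):
            return None
        if all(key in args for key in spec["parameters"].get("required", [])):
            return args
    return None
```

A reply that only contains text, however tool-like it looks, has no `tool_calls` entry and is rejected by this check.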

Test Chronology: Methodical Search for Limits

I started with the smallest models, systematically increasing their size.

Test 1: Gemma 3 Tools 2B ❌ (Format Failure)

- Model: gemma:3-tools-2b (2 billion parameters)

- Expectation: The name “Tools” suggests support for function calling.

- Result: Immediate application error: Error: invalid message content type: <nil>

- Diagnosis: The model is too small. Instead of returning a correct JSON structure or an empty response, it returned nil. The OpenAI API format is completely incomprehensible to it.

Test 2: Mistral 7B ❌ (Logic Failure)

- Model: mistral:7b (7 billion parameters)

- Expectation: One of the most popular models, great for general tasks.

- Result: Asked “Jaki jest adres firmy?” (“What is the company’s address?”), the model wrote the call out as plain text, wrapped in a yaml code fence, instead of issuing it:

```
User: Jaki jest adres firmy?
AI: search_documents_tool("adres firmy")
```

- Diagnosis: Mistral understood the intent: it knew it should use a tool. However, instead of calling it via the API (generating the special JSON), it “wrote” the code in the response. It treats the tool definition as plain text to mimic, not as a meta-instruction to execute an action.
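A reply like this can at least be detected, and sometimes salvaged, with a small text parser. The sketch below is an illustration only and not something Pydantic AI or Ollama does for you; the name `extract_text_call` and the assumption that the tool takes a single quoted string argument are hypothetical.

```python
import re

# Matches a written-out call such as search_documents_tool("adres firmy"),
# with either single or double quotes around one string argument.
_CALL_RE = re.compile(
    r"""(?P<name>[A-Za-z_]\w*)\(\s*(?P<q>["'])(?P<arg>.*?)(?P=q)\s*\)"""
)


def extract_text_call(text, known_tools):
    """Return (tool_name, argument) if `text` contains a written-out call
    to one of `known_tools`, else None."""
    for match in _CALL_RE.finditer(text):
        if match.group("name") in known_tools:
            return match.group("name"), match.group("arg")
    return None
```

Restricting matches to `known_tools` keeps ordinary code in an answer, such as a print call, from being mistaken for a tool request.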

Test 3: Qwen3-VL 8B ⚠️ (Performance Failure)

- Model: qwen3-vl:8b (8 billion parameters)

- Expectation: A model with an additional “thinking” layer; I thought it would handle it, and it did.

- Result: Success! Logs showed: 🔍 TOOL CALLED: search_documents('firma adres')

- Problem: Response time: 11 minutes and 23 seconds!

- Diagnosis: The model correctly handled function calling, but its additional “reasoning” (thinking) layer caused it to “ponder” for over 11 minutes before calling the tool. In a chat application, this is absolutely unacceptable.

Test 4: Mistral Nemo 12B ✅ (Partial Success)

- Model: mistral-nemo:12b (12 billion parameters)

- Expectation: Crossing the 10B threshold should help.

- Result: 🔍 TOOL CALLED: search_documents('firma'). It works! Response time: approx. 30 seconds.

- Problem: Poor Polish language handling. Despite Polish prompts, the model responded in English or an indigestible “ponglish” mixture.

- Diagnosis: Function calling works, but the model is useless for a Polish user.

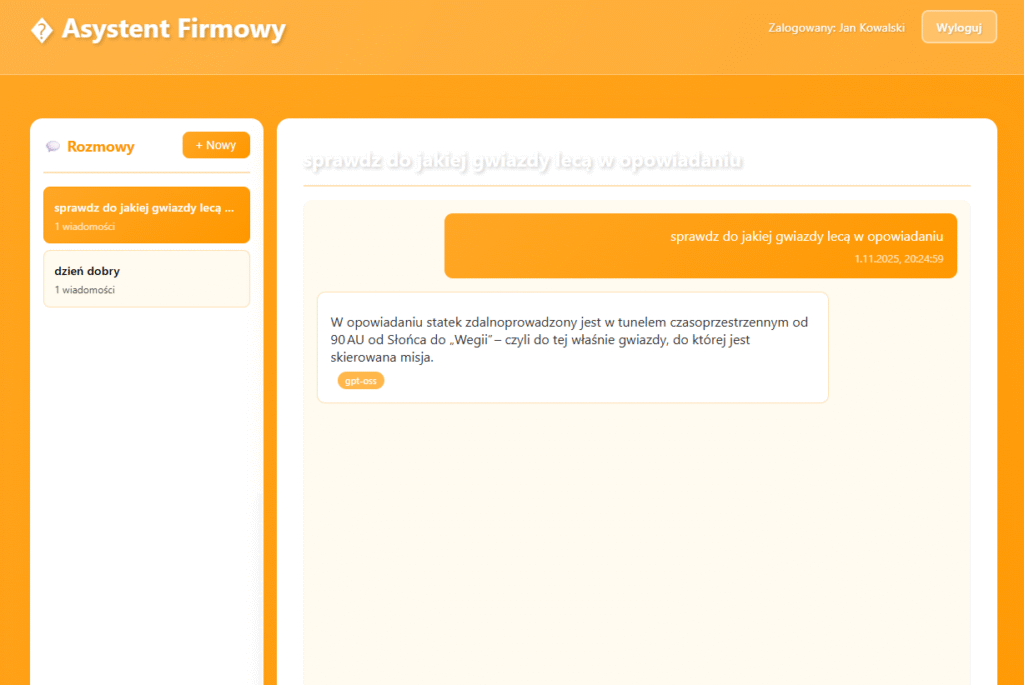

Test 5: GPT-OSS 14B ✅ (Today’s Winner)

- Model: gpt-oss:14b (14 billion parameters)

- Expectation: The last hope among medium-sized models.

- Result: Full success.

    💬 NEW CHAT REQUEST
    Question: 'Do jakiej gwiazdy lecą w opowiadaniu?'
    🔍 TOOL CALLED: search_documents_tool
    Query: 'gwiazda opowiadanie cel podróży'
    Documents: WEGA.pdf
    Chunks: 3
    🤖 AI Response: "W opowiadaniu statek jest w tunelu czasoprzestrzennym od 90 AU od Słońca do „Wegii" – czyli do tej właśnie gwiazdy, do której jest skierowana misja."

  (In English: the question asks which star the characters are flying to in the story; the answer says the ship is in a space-time tunnel leading from 90 AU from the Sun to Vega, the very star the mission is headed for.)

- Diagnosis:

- Function calling works perfectly.

- The model autonomously decides when to use a tool.

- Polish language is at a very good level.

- Response time (approx. 45 seconds on RTX 4060 Ti 16GB) is acceptable for an internal application.

Analysis: Why Do Small Models Fail with Function Calling?

The problem lies in the complexity of the task. The standard OpenAI Function/Tool Calling requires the model to perform three steps:

- Tool Definition Analysis: Understanding the tool definitions, a nested JSON Schema description of each tool that is sent along with the conversation.

- Decision: Deciding whether to respond directly or use one of the available tools.

- Response Generation: Creating a precise, subsequent JSON structure (instead of plain text) that instructs the system which tool to call and with what arguments.
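The three steps above can be sketched as a small loop around the model. This is a simplified illustration of the general pattern, not Pydantic AI's actual implementation; `fake_model`, the stub `search_documents_tool` and `run_agent` are hypothetical stand-ins so the example runs without a model server.

```python
import json


def search_documents_tool(query):
    """Stand-in for the real vector search (hypothetical data)."""
    return ["chunk about: " + query]


TOOLS = {"search_documents_tool": search_documents_tool}


def fake_model(messages):
    """Hypothetical model: calls the tool once, then answers from its result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": "Based on: " + last["content"]}
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {
                "name": "search_documents_tool",
                "arguments": json.dumps({"query": last["content"]}),
            },
        }],
    }


def run_agent(model, question, max_turns=3):
    """Call the model, execute any requested tools, and repeat until it answers."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_turns):
        reply = model(messages)  # steps 1 and 2 happen inside the model
        messages.append(reply)
        calls = reply.get("tool_calls")
        if not calls:  # the model chose to answer directly
            return reply["content"]
        for call in calls:  # step 3 produced structured output we can execute
            fn = call["function"]
            result = TOOLS[fn["name"]](**json.loads(fn["arguments"]))
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": json.dumps(result, ensure_ascii=False),
            })
    raise RuntimeError("no final answer within max_turns")
```

The loop only works if the model emits the structured `tool_calls` entry; a model that writes the call as text falls into the "answer directly" branch, and its pseudo-code is shown to the user as the final answer.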

In my tests, models below 10B parameters without a dedicated reasoning mode seemed to lack sufficient “depth” to understand this meta-instruction. Mistral 7B, for example, treated the tool definition as an example of text to mimic (hence search_documents_tool("adres firmy") in the response) rather than as a command to perform an action. Qwen3-VL 8B did get there, but only after minutes of “thinking.”

But I’m not giving up yet. I will try to perform my test on other models; I’ve avoided Llama 3.1 so far. It has weak Polish, but I think it might work.

The table below summarizes the test results:

| Model (Size) | Function Calling | Response Time | Polish Language |
| --- | --- | --- | --- |
| 2B (Gemma) | ❌ Format Error | N/A | N/A |
| 7B (Mistral) | ❌ Writes code instead of calling | ~30s | ✅ |
| 8B (Qwen3-VL) | ✅ Works | ❌ 11 minutes | ✅ |
| 12B (Mistral Nemo) | ✅ Works | ✅ 30s | ❌ |
| 14B (GPT-OSS) | ✅ Works | ✅ 45s | ✅ |

Conclusions and Recommendations

Building AI systems is an art of compromise – in this case, between model size, its “intelligence,” and available computational power.

My tests show that if you want to build local AI agents capable of autonomously using tools (via an OpenAI-style API), the minimum entry threshold is a model with 12-14 billion parameters – at least for the current state of these tests.

Falling below this threshold leads to frustration and logical errors that cannot be fixed with simple prompt engineering.

What if you must use a small model (<10B)?

You have to abandon the elegant function calling API and revert to older techniques:

- Regex Parsing: Teaching the model to return a special token (e.g., ACTION: SEARCH[query]), which is then extracted from the response.

- ReAct Approach: Implementing a loop where the model “thinks” and “acts,” and we manually parse its responses.

- “Always Search” Approach: Less intelligent but simple. Every user query is first passed through the RAG search engine, and the results are added to the context.

- Plain JSON: Asking the model to reply with plain JSON and handling it with classic if statements and functions.

All these workarounds function, but they are less elegant and precise than native function calling.
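As a rough illustration, three of these workarounds fit in a few lines of standard-library Python. The ACTION: SEARCH[query] token comes from the list above; the JSON shape with "tool" and "query" keys, the prompt layout and all function names are assumptions made for this sketch.

```python
import json
import re

ACTION_RE = re.compile(r"ACTION:\s*SEARCH\[(?P<query>[^\]]*)\]")


def parse_action(text):
    """Regex approach: return the query from 'ACTION: SEARCH[query]', or None."""
    match = ACTION_RE.search(text)
    return match.group("query").strip() if match else None


def parse_json_action(text):
    """Plain-JSON approach: expect {"tool": "search", "query": "..."}."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if isinstance(data, dict) and data.get("tool") == "search":
        return data.get("query")
    return None


def build_always_search_prompt(question, search):
    """'Always search' approach: retrieve first, then put the chunks in the prompt."""
    chunks = search(question)
    context = "\n".join("- " + chunk for chunk in chunks)
    return "Context:\n" + context + "\n\nQuestion: " + question
```

In each case the application, not the model, decides what counts as a tool request, which is why these approaches are more forgiving of small models and also less precise.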

For me, the choice fell on the gpt-oss:14b model. These methodical tests allowed me to precisely determine the limitations of available tools before building an entire system upon them.

What are your experiences with function calling on small models?
