Almost every day, I encounter a misunderstanding of what AI is. A vast number of people see no difference between an assistant and an agent, or between a model and an application. Many companies and individuals make poor business decisions because they do not understand the terminology or how AI-related technologies work. The result is a huge number of failed implementations and, ultimately, wasted money.

That's precisely what I want to explain in simple terms in this article.

The last two years have made artificial intelligence a permanent fixture in our offices. From managers to specialists, everyone wants to implement it, promising themselves a business revolution. In this enthusiasm, mistakes are often made. I hear questions and statements like:

- "Is ChatGPT the same as AI?"

- "If we have the model, why can't it do what I want?"

- "Let the model learn from our data and make decisions in real-time."

- "Can't we just retrain it to know our company's knowledge?"

The biggest problem isn't the technology, but a lack of understanding of it. This article aims to organize the basic concepts so that everyone, regardless of position or experience, can take part in informed discussions about AI implementations in their company.

1. AI is the umbrella, LLM is a part of it, and ChatGPT is a finished product.

This is a fundamental difference that must be understood.

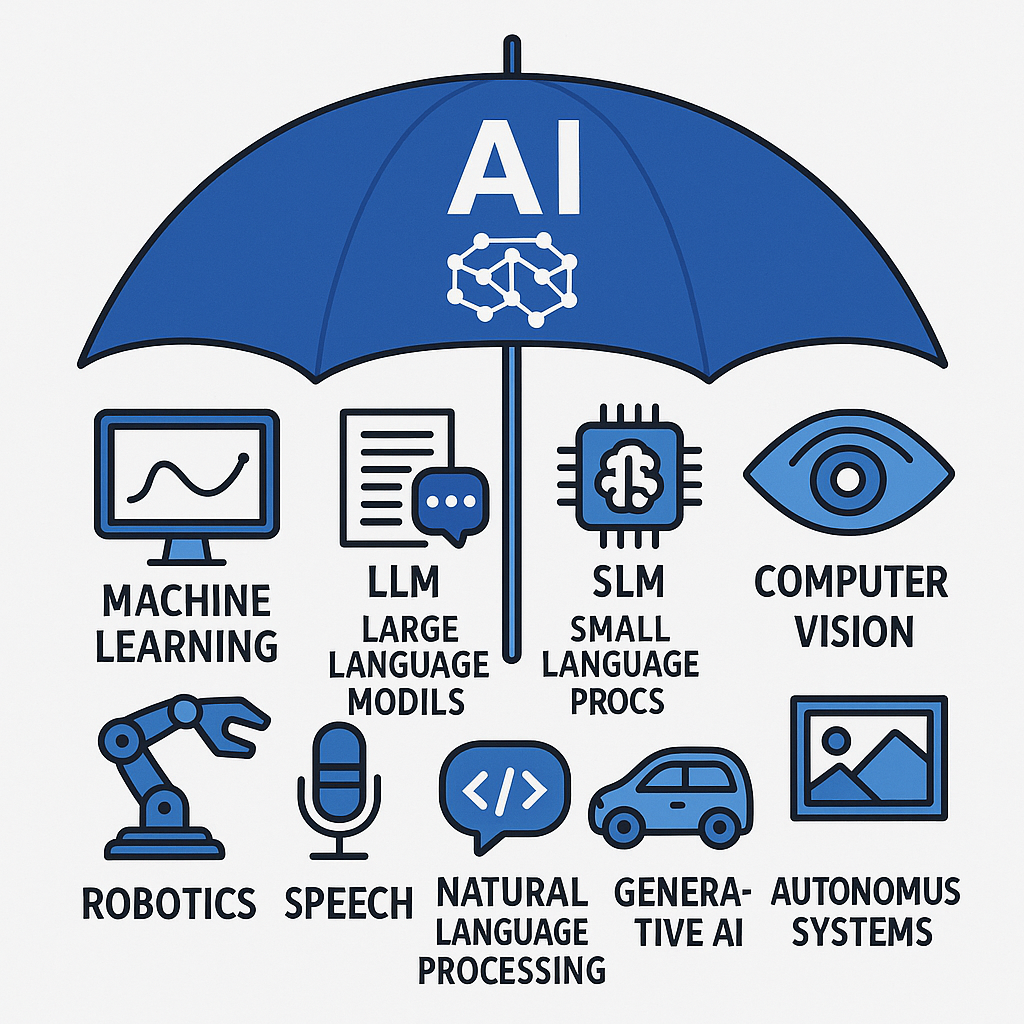

Artificial Intelligence (AI) is a broad field of science at the intersection of computer science, neuroscience, and psychology. In the future, I'll describe in more detail how this 'artificial brain' works 😉. It's an umbrella term that covers everything from simple recommendation algorithms (like on Netflix) to advanced speech recognition systems, large language models (LLMs, including the well-known GPT), and multimodal models that understand and generate images and sounds in addition to text.

Large Language Models (LLMs), such as GPT, Gemini, Sonnet, or our native Polish Bielik.ai (though here we have an SLM, or Small Language Model), are one of the fastest-developing branches of AI. They are the "engines" capable of generating text and code or summarizing data. They are raw, trained models that are not finished applications in themselves, just as a car engine is not yet a finished car.

ChatGPT, on the other hand, is a finished application. It's a product where an LLM (that "engine") has been used, wrapped in a user-friendly interface, and made available to users. It was this application that made LLMs popular.

Important: LLMs are pre-trained models. They cannot be cheaply and easily "trained" for our specific needs. The larger the model, the more new data it takes to change its behavior even slightly.

However, machine learning (ML) techniques can be successfully used to create much smaller, specialized models. The aforementioned Netflix learns from user data about what they like and what they might enjoy. This involves much smaller amounts of data for learning than in the case of LLMs. In a factory with machines, you can analyze data from their operation in real-time to predict failures and downtime and act proactively. Such models are created by Data Scientists, specialists in algorithms and mathematics, who can select the appropriate learning method for a specific business case. We cannot have a conversation with these models.
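As a rough illustration of how small such a specialized model can be, here is a minimal sketch of the factory case: the "model" is just the mean and standard deviation learned from readings taken during normal operation, and a new reading is flagged when it deviates too far. The sensor values, the function names `fit` and `is_anomaly`, and the threshold of 3 standard deviations are all assumptions made for this example; real predictive-maintenance models built by Data Scientists are usually far more sophisticated.

```python
from statistics import mean, stdev


def fit(normal_readings: list[float]) -> tuple[float, float]:
    """'Train' the model: learn what normal operation looks like."""
    return mean(normal_readings), stdev(normal_readings)


def is_anomaly(reading: float, model: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag a reading that lies more than `threshold` standard deviations from normal."""
    mu, sigma = model
    return abs(reading - mu) > threshold * sigma


# Hypothetical temperature readings from a machine working normally.
normal_temps = [70.1, 69.8, 70.4, 70.0, 69.9, 70.2, 70.3, 69.7]
model = fit(normal_temps)

print(is_anomaly(70.1, model))  # a typical reading
print(is_anomaly(75.0, model))  # a reading far outside the learned range
```

Note that this model only outputs a yes/no signal from numbers. There is nothing to "talk" to, which is exactly the difference from an LLM.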

To summarize: AI is the whole category, LLM is a subcategory, and ChatGPT is a specific, popular example of a product based on an LLM.

2. Training, Fine-Tuning, and RAG – three different elements that affect a model's "knowledge."

What we colloquially call "retraining AI" in the sense of providing knowledge to LLMs can actually mean several different processes. Choosing the right one is crucial for a project's success.

Training from scratch: This is the process of creating a model from the ground up. It involves feeding it huge, diverse datasets (terabytes of text). It is extremely expensive and time-consuming. Models like GPT or Gemini are created this way. Your company will likely never train an LLM from scratch.

Fine-Tuning: This is the process of "retraining" an existing model on a smaller, specialized dataset, typically containing pairs of questions and answers. It is not a reliable way to add new "knowledge"; it mainly adjusts the style and manner in which the model responds. Technically, it slightly changes the weights between individual neurons.

RAG (Retrieval-Augmented Generation): This is the most practical solution for most companies. Instead of "retraining" the model, we retrieve relevant content and provide it as additional context at the moment of the query, for example a fragment of a PDF file with a company procedure. The model uses this content to answer the question precisely. It's like giving it a "cheat sheet" on the fly. RAG allows the use of general models (like GPT) in the context of company-specific knowledge, without the need for costly fine-tuning.
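The "cheat sheet" idea can be sketched in a few lines. This is a simplified illustration, not a production design: the documents, the function names, and the prompt wording are invented for the example, and simple keyword overlap stands in for the retrieval step, which real systems usually implement with embeddings and a vector database. The resulting prompt is what would then be sent to the LLM.

```python
import re

# Hypothetical company documents; in practice these would come from PDFs or a wiki.
DOCUMENTS = {
    "vacation_policy": "Employees request vacation in the HR portal at least 14 days in advance.",
    "expense_policy": "Expenses above 500 EUR require written approval from a manager.",
    "it_security": "Passwords must be changed every 90 days and never shared by email.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: dict[str, str]) -> str:
    """Return the name of the document sharing the most words with the question."""
    question_words = tokenize(question)
    return max(documents, key=lambda name: len(question_words & tokenize(documents[name])))


def build_prompt(question: str, documents: dict[str, str]) -> str:
    """Attach the retrieved document to the question as context for the model."""
    name = retrieve(question, documents)
    return (
        "Answer using only the context below.\n\n"
        f"Context ({name}): {documents[name]}\n\n"
        f"Question: {question}"
    )


print(build_prompt("Who must approve expenses above 500 EUR?", DOCUMENTS))
```

The model itself is never modified. All company-specific knowledge arrives in the prompt, which is why updating the "knowledge" is as simple as updating the documents.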

3. Assistant vs. Agent – more than just names.

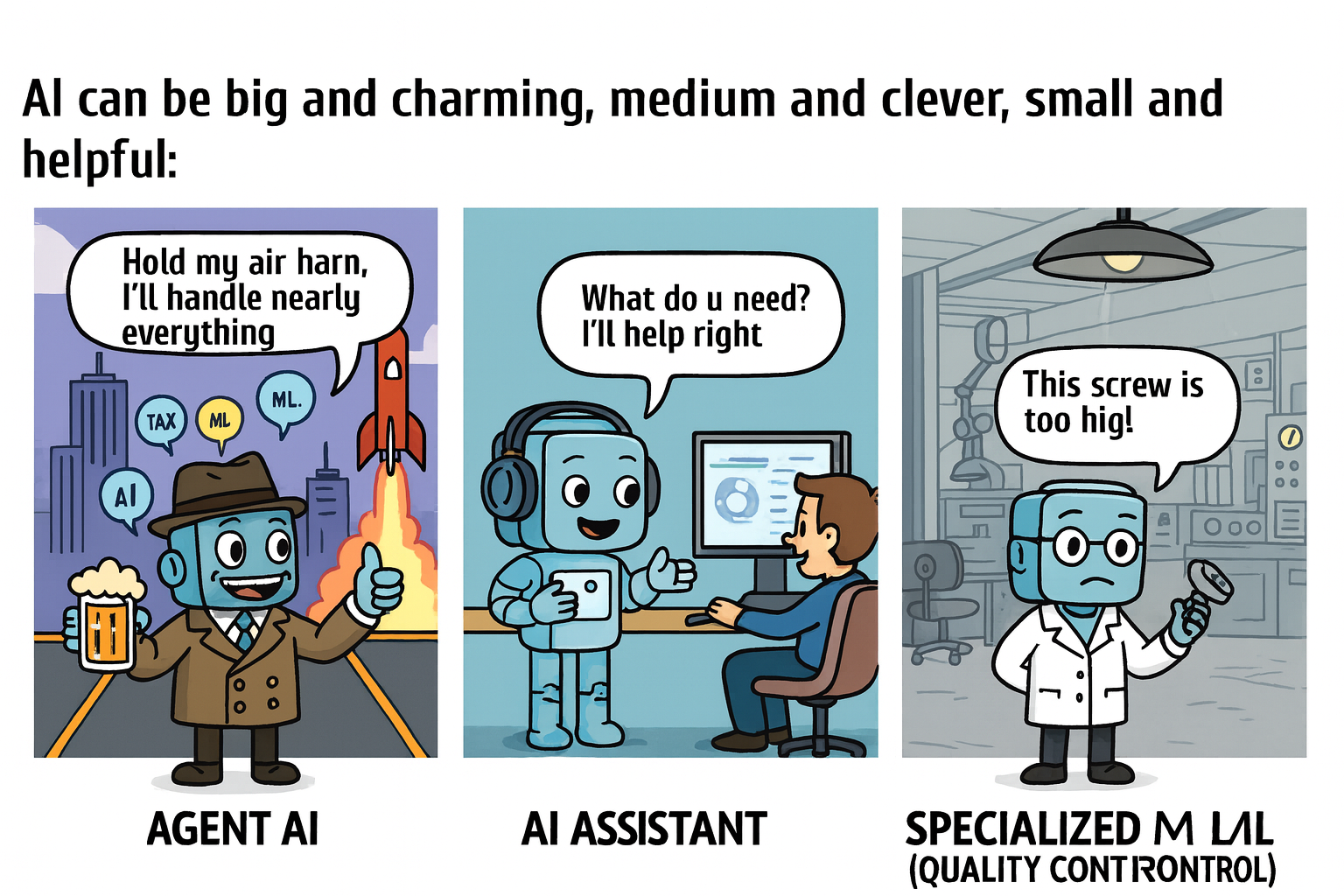

Another important distinction is the role that AI plays in a system.

Assistant: This is a passive role. An assistant answers questions and generates text, but always waits for a command from a human. A standard chatbot is an example.

Agent: This is an active role. An agent not only responds but can also independently make decisions and perform a series of actions. Its operation also starts with some trigger (e.g., a user's command or an email arriving in our inbox), but the agent tries to complete the task assigned to it in a loop, acting until it's finished.

I often hear about agents that "make calls." In fact, the part that calls and talks is a voicebot (which might use an LLM for conversation), but the decision to make the call and what to do after it is indeed the action of an agent.
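The difference between the two roles can be sketched in code. This is only an illustration under stated assumptions: `run_assistant`, `run_agent`, and `scripted_planner` are hypothetical names, and the scripted planner is a stand-in for the decisions an LLM would make in a real agent.

```python
from typing import Callable


def run_assistant(respond: Callable[[str], str], command: str) -> str:
    """Assistant: one command in, one answer out, then it waits for the next command."""
    return respond(command)


def run_agent(
    decide_next_step: Callable[[str, list[str]], str],
    task: str,
    max_steps: int = 10,
) -> list[str]:
    """Agent: after a trigger, keeps choosing steps in a loop until the task is done."""
    history: list[str] = []
    for _ in range(max_steps):  # safety limit so the loop always terminates
        step = decide_next_step(task, history)
        if step == "done":
            break
        history.append(step)  # a real agent would execute the step here
    return history


def scripted_planner(task: str, history: list[str]) -> str:
    """Stand-in for the model: follows a fixed plan instead of reasoning."""
    plan = ["read email", "draft reply", "send reply"]
    return plan[len(history)] if len(history) < len(plan) else "done"


print(run_assistant(str.upper, "summarize this text"))
print(run_agent(scripted_planner, "answer the customer"))
```

The assistant returns control to the human after every answer, while the agent decides on its own what to do next and when to stop.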

4. Interaction with the environment.

On their own, large language models have no capabilities other than accepting input and providing output. For a text-only model, the input can be anything, as long as it is expressed as text. For such a model to actually do something, it uses so-called "tools," which are classically programmed functions that, based on the model's output and the parameters it provides, perform an action (they can even control a robot).

Example: An email arrives in our inbox. We have many inboxes and no time to check them. An agent does it for us. The application checks for new emails every minute. When a new email arrives, its content is sent as input to the AI/LLM. The model also receives standing instructions, a so-called system prompt, which might say: "When the email contains a request for a quote, use the reply_to_email function to respond that an offer will be sent shortly, and use the forward_to_chat function to send the information to me via chat." Using these functions, it performs exactly those actions.
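The email scenario can be sketched as follows. The names reply_to_email and forward_to_chat come from the example above; everything else is an assumption made for illustration: the JSON format of the tool calls, the `fake_model` function (a stand-in for a real LLM call), and the lists that record what the tools did instead of sending anything. The key point is that the model only produces text describing which function to call, and ordinary code performs the action.

```python
import json

SYSTEM_PROMPT = (
    "When the email contains a request for a quote, use reply_to_email to respond "
    "that an offer will be sent shortly, and use forward_to_chat to notify me."
)

sent_replies: list[tuple[str, str]] = []  # records replies instead of sending them
chat_messages: list[str] = []             # records chat notifications


def reply_to_email(sender: str, text: str) -> None:
    sent_replies.append((sender, text))


def forward_to_chat(text: str) -> None:
    chat_messages.append(text)


# The tools the model is allowed to use, looked up by name.
TOOLS = {"reply_to_email": reply_to_email, "forward_to_chat": forward_to_chat}


def fake_model(system_prompt: str, email: dict) -> str:
    """Stand-in for an LLM: returns the requested tool calls as JSON text."""
    calls = []
    if "quote" in email["body"].lower():
        calls = [
            {"tool": "reply_to_email",
             "args": {"sender": email["from"], "text": "An offer will be sent shortly."}},
            {"tool": "forward_to_chat",
             "args": {"text": f"Quote request from {email['from']}"}},
        ]
    return json.dumps(calls)


def handle_email(email: dict) -> list[str]:
    """Send the email to the model, then execute whatever tool calls it returns."""
    output = fake_model(SYSTEM_PROMPT, email)
    executed = []
    for call in json.loads(output):
        TOOLS[call["tool"]](**call["args"])  # classic code performs the action
        executed.append(call["tool"])
    return executed


print(handle_email({"from": "anna@example.com", "body": "Please send a quote for 10 units."}))
```

In a real deployment, `fake_model` would be replaced by a call to an actual LLM, and the two tool functions would connect to a mail server and a chat system.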

Summary

Understanding these differences is the first step towards building a strategic IT systems architecture that will truly benefit your company. AI is not magic, but an advanced tool whose potential is unlocked only when we know how to apply it correctly.

Instead of asking, "Can AI do this?", we should be asking, "Which model, in which architecture, and in which product will best solve our specific problem?" This change in perspective is the key to success in the digital age.
